While enterprise investment in artificial intelligence development is growing and showing no signs of slowing, many AI development projects are stuck in pilot and experimentation stages, with significant roadblocks between their current status and a successful deployment. Getting these projects unstuck requires a comprehensive understanding of the model’s performance as it relates to end-user requirements, specific tasks, and safety for both user and organization. In short: an AI model evaluation plan that goes far beyond model evaluation metrics like benchmarking.

While traditional model evaluation wisdom involves creating a confusion matrix and calculating accuracy, precision, recall, and F1 scores accordingly, this standard approach ends up being insufficient for complex generative AI models that have hundreds of hyperparameters and are trained primarily on unstructured data — a far cry from the much more basic classification models that were developed and evaluated in previous decades.

While typical research and data science teams look at AI model evaluation as one step in the development process, the world’s leading AI developers approach it as an integral part of their training strategy, informing not just what data you need, but how it should be structured, how much data you need, and what training approach is best suited to fix the gaps at hand. In this blog, we’ll explore what this holistic approach to AI performance evaluation looks like for an enterprise AI project.

Leading AI development and data science teams ensure that machine learning model evaluation begins at the roadmapping stage of the AI journey, with an in-depth analysis of their end-users requirements and preferences. When AI developers skip this crucial step in the process, they risk investing in an application that doesn’t get adopted by its intended audience, risking low ROI or even project abandonment.

If our team is designing an agentic ML model to function as an assistant to investors at a finance organization, for example, we’ll need to know whether investors typically prefer to receive a history of their transactions as a table or a bulleted list. Surveying the end user for their requirements will also give us a clear picture of how your model should function, free of the assumptions and biases of those who won’t ultimately be using the deployed model.

While the specific information we collect in this AI model evaluation depends on the use case and end user, here are some questions that can help guide our research:

With the availability of powerful LLMs and computer vision models trained on internet-scale datasets, building a model from scratch is often unnecessary for most enterprise AI developers. Choosing the right generative AI foundation model for your use case — in other words, the model that already delivers results that are closest to your end users requirements — can make a significant difference in terms of AI model training time, costs, and performance.

To find the right model for your needs, we’ll need to compare multiple foundation models on their performance in areas and tasks related to your use case. It’s easy to make our choice by looking at high level benchmarks like the model’s accuracy, but drilling down and testing each model’s ability on specific tasks can help us get a much clearer picture of both machine learning model performance and the AI training journey ahead.

For example, if we’re testing LLMs for our investor assistant agentic use case, looking at the evaluation metrics such as the average quality of responses offers typical high-level benchmarking. However, these results are often subjective, show differences that aren’t statistically significant, and don’t offer insight into how well each model performs on the specific requests tied to the agentic use case.

Assessing these LLMs on how frequently they return answers with errors can offer a clearer view of how they compare against one another in a way that’s more in line with the use case. While the first AI performance evaluation showed Model 1 as a (very slight) frontrunner, digging deeper into error frequency shows that Model 2 actually delivers fewer errors by four percentage points. Still, we don’t know the type or severity of errors that are showing up for each of these models, so it’s difficult to create a training data strategy that will improve our agentic model’s performance.

To evaluate these generative AI foundation models for insights that can better inform our training data strategy, we’ll have to drill a bit deeper, getting data on the types of errors made by each model and the frequency of each type of error. By categorizing the model errors, we can see that while Model 2 has the lowest error rate, some of the types of errors it produces are quite dangerous for an investor assistant application, such as illegal activity and inappropriate evasive responses.

While spelling and grammar errors are easy to fix with low-effort training datasets, giving advice without hedge, assuming context, and illegal advice are all much more difficult to correct and will require more complex human data. In comparison, while Model 3 produces more errors, very few of them are prohibitively dangerous or complex.

From this more in-depth look at AI model performance, we can see that not only does Model 3 give us a starting point that’s closest to the performance level we’re looking to achieve with our agentic model; it also offers a clear and comparatively easy training path to get our desired outcome.

With a training data strategy that includes a low-cost set of synthetic data aimed toward correcting spelling and grammar errors and a small set of more complex human data to address the factual, compliance, context, and evasive response errors, we should be able to get our investor assistant AI application to production-level performance level for errors.

Once we’ve chosen our foundation model and established a starting point of performance, it’s time to dig even deeper into where the model falls short of desired results. For this analysis, we want to look at model predictions and responses to a variety of specific requests based on how end users will be leveraging the model. We’ll also want to do our best to prompt the model to “break,” or produce unwanted responses within the context of our use case.

This stage is also where we’ll want to evaluate our AI model predictions for safety — making sure we’re protecting enterprise data, shielding our end users from harmful content, and meeting compliance requirements for any current or future ethical AI and data policies.

Collecting evaluation metrics for our investor assistant use case, for example, would include:

With a thorough gap analysis that gives us a deep understanding of model performance with respect to our performance goals, we can develop a precise training data strategy that addresses our specific needs without wasting our team’s time, budget, or resources on training datasets that doesn’t yield results.

Implementing a holistic AI model evaluation strategy can help AI teams create effective, adoptable, and safe applications for their organizations — but the results that make this process most appealing for leadership is the reduction in required training data that can make AI development faster, easier, and much more cost-effective.

For example, one Invisible client set out to reduce a specific type of error in their LLM, where it frequently produced incorrect or otherwise harmful responses. The client hypothesized at the outset that it would take about 100,000 rows of human data to address this issue and significantly reduce the amount of harmful responses generated by the model.

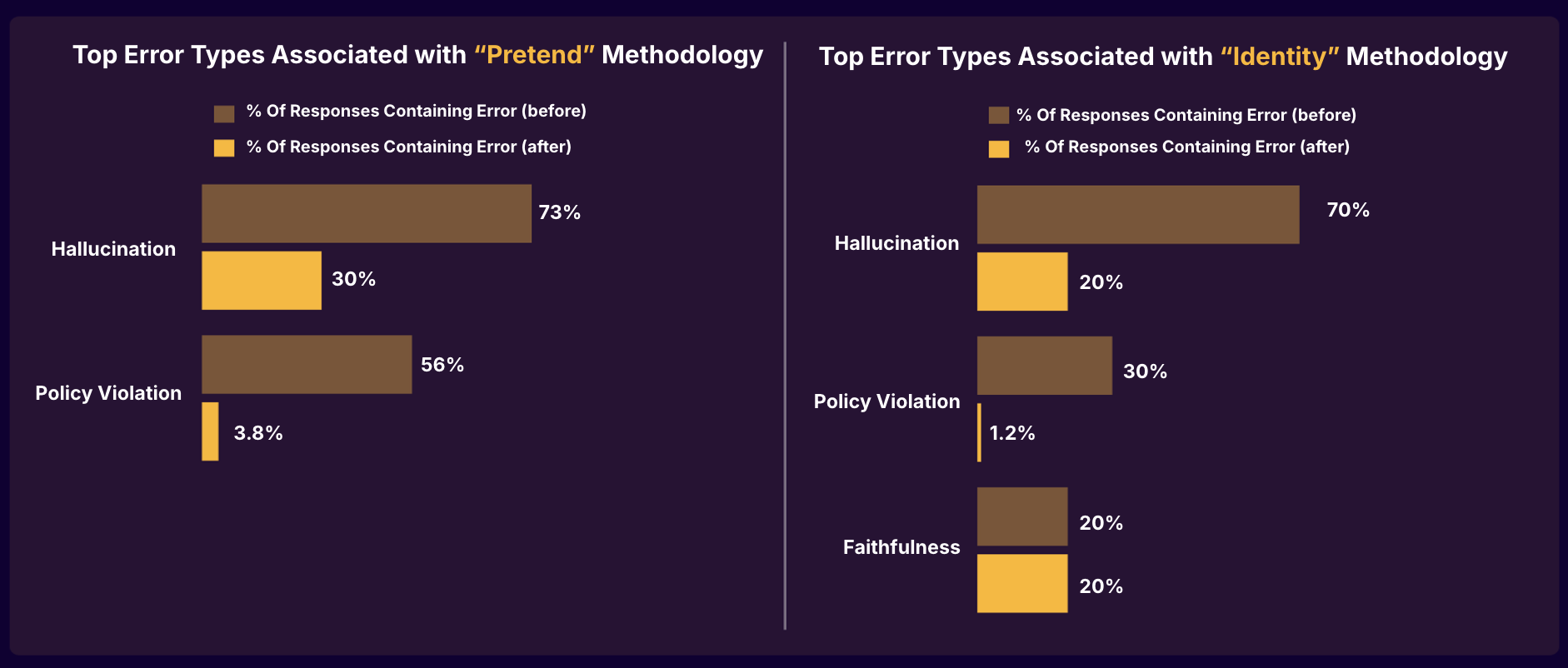

Invisible’s in-depth model evaluation methods, however, showed that the majority of hallucinations and harmful responses occurred when the LLM was prompted to pretend to be a person of authority or assume a specific professional identity. The Invisible team generated a training data set that paired accurate, helpful answers with similar “pretend” or identity assumption prompts.

Once ingested, the model’s frequency of hallucinations and policy violating responses dropped by 97%. In the end, the client’s harmful content reduction goals were met with only 4000 rows of new data — only a small fraction of the 100,000 rows they had originally planned.

Ready to put Invisible’s proven model evaluation methodology to work for your AI projects? Request a demo today to learn how we can help your team improve AI performance quickly, easily, and with less training data than you think you need.

New to AI model evaluation? Here’s a quick glimpse into some of the key terms, evaluation metrics, and methods you’ll come across in your research.

A confusion matrix is a sophisticated diagnostic tool in machine learning that provides a granular visualization of a classification model's performance. Think of it as a detailed report card that breaks down how accurately a classifier model predicts different classes. The matrix displays four critical outcomes: true positives (correctly identified positive instances), true negatives (correctly identified negative instances), false positives (negative instances incorrectly labeled as positive), and false negatives (positive instances incorrectly labeled as negative).

For example, in a medical screening model detecting a disease, the confusion matrix would reveal not just overall accuracy, but precisely where the model makes mistakes. Are false negatives (missed diagnoses) more prevalent, or are there many false positives (unnecessary patient anxiety)? This nuanced understanding helps data scientists refine models where precision could literally save lives.

From a confusion matrix, data scientists and machine learning engineers can calculate key classification metrics such as:

Cross-validation is a statistical technique designed to assess a model's performance by systematically partitioning data into training sets and testing subsets. Imagine dividing a large dataset into multiple smaller segments, like cutting a cake into equal slices. The most common approach, k-fold cross-validation, involves dividing the data into k equally sized segments.

In a 5-fold cross-validation, the model would be trained on 4/5 of the data and tested on the remaining 1/5, repeating this process five times with different training-testing combinations. This approach ensures that every data point gets a chance to be both in the training and testing set, providing a more comprehensive and reliable estimate of the model's predictive capabilities.

The diversity score is a metric designed to evaluate the variability and creativity of content from a generative AI model across multiple generations. It measures how much a generative model can produce unique outputs when given the same or similar prompts, preventing repetitive or overly predictable generations.

Consider this like testing an artist's range: can they create multiple distinct paintings when given a similar starting point? A high diversity score suggests the model can generate varied, creative content, while a low score indicates a tendency to reproduce similar outputs, potentially suggesting limited creative capacity.

The embedding space alignment is an advanced evaluation technique that assesses how well a generative model's outputs align with the semantic representations of input data in a high-dimensional vector space. This method involves comparing the vector representations of generated content with those of reference or training data.

Imagine this as a sophisticated mapping exercise where each piece of text is represented as a point in a complex, multi-dimensional space. Good alignment means generated content clusters near semantically similar reference points, indicating that the model has captured the underlying meaning and context of the training data.

Ground truth represents the absolute, real-world correct answer or authentic reference data against which we compare model predictions. Consider ground truth as the gold standard, the ultimate benchmark of accuracy that serves as the definitive baseline for evaluating model performance. In an image recognition model, ground truth is typically a dataset of images labeled by subject matter experts. In predictive modeling, ground truth represents the actual, observed outcomes, which we can compare to the model’s predictions.

The hallucination rate is a critical metric in generative AI model evaluation that measures the frequency of generating false, fabricated, or contextually incorrect information. Unlike traditional model evaluation metrics, hallucination rate specifically addresses the tendency of AI models to produce plausible-sounding but factually incorrect content.

In large language models (LLMs), hallucinations are like creative but unreliable storytellers who mix truth with fiction. A low hallucination rate indicates a model that stays close to factual information, while a high rate suggests the model frequently invents or distorts information. This metric is particularly crucial in domains requiring high factual accuracy, such as scientific research, medical information, or journalistic reporting.

This nuanced statistical measure is used to evaluate language-focused generative AI models, particularly in assessing how well a probabilistic model predicts a sample of text. Think of perplexity as a sophisticated "surprise meter" that quantifies how unexpected or complex a model finds a given sequence of words.

Lower perplexity indicates better model performance, suggesting the model more accurately predicts the next word in a sequence. Imagine a language model as a sophisticated prediction engine: a low perplexity score means it's consistently making educated, precise guesses about upcoming text. In practical terms, this translates to more coherent and contextually appropriate text generation.

Precision is a critical metric for classifier model evaluation. It measures the exactness of positive predictions by calculating the proportion of correctly identified positive instances among all positive predictions. It answers the question: "When the model predicts a positive result, how often is it actually correct?"

In a spam email detection system, high precision means that when the model flags an email as spam, it's very likely to be spam. A precision of 0.90 indicates that 90% of emails labeled as spam are genuinely unwanted, minimizing the risk of important emails being incorrectly filtered.

Recall is a performance metric assessing a classification model's ability to capture all relevant positive instances. It measures the proportion of actual positive cases correctly identified, answering the question: "Of all the positive instances that exist, how many did the model successfully detect?"

The ROC-AUC is a powerful performance metric that evaluates a classification model's discriminative capabilities by plotting its ability to distinguish between different classes. Imagine a graph that reveals how well a model can separate signals from noise across various classification thresholds.

The ROC curve tracks the trade-off between the true positive rate and the false positive rate. An AUC of 0.5 suggests the model performs no better than random guessing, while an AUC approaching 1.0 indicates exceptional classification performance. For example, in medical diagnostics, a high ROC-AUC might represent a screening test's ability to reliably distinguish between healthy and diseased patients.

A regression analysis reveals specific strengths and weaknesses in a machine learning model's predictive capabilities. It can also help data scientists better understand feature importance, potential overfitting or underfitting, and relationships between variables (which can be calculated with a linear regression). Some of the key metrics we can calculate from this analysis include:

A regression model is a predictive mathematical framework designed to estimate continuous numerical outcomes by exploring relationships between variables. Unlike classification models that assign discrete categories, regression models forecast precise numeric values, revealing intricate connections between input features and target variables.

Consider a house price prediction model. A regression model doesn't just categorize houses as "expensive" or "cheap," but predicts a specific price based on features like square footage, location, number of rooms, and local market trends. The model learns complex, non-linear relationships, understanding how multiple interconnected variables influence the final prediction.

Semantic coherence is an advanced evaluation method that assesses how well a LLM maintains logical and meaningful connections across generated text. It goes beyond simple grammatical correctness, examining whether the generated content demonstrates a deep understanding of context, maintains a consistent narrative thread, and produces logically connected ideas.

Imagine semantic coherence as a measure of the model's ability to tell a story that makes sense from beginning to end. A high semantic coherence score indicates that the generated text flows naturally, maintains a clear theme, and demonstrates a sophisticated understanding of language and context.